Online Operations Manual

Features

Count Tracker

We want the background to be collected when the data consists of Gaussian noise. However, since we don’t know beforehand when gravitational wave signals will occur, signals can contaminate the background. Signals that are loud in one detector but quiet in the others are particularly prone to this. Contamination from the same signal is often seen in multiple adjacent bins. The Count Tracker is a feature that helps remove signals that have contaminated the background.

In online mode, whenever there’s a trigger with a FAR below the threshold, a GraceDB alert is created. When this happens, the Count Tracker creates a record of a subset of the background in a 10 second window around the alert. The record is in the form of counts, each corresponding to a particular SNR and chi bin, and the subset is defined as the part of the background with SNR > 6 and chi^2/SNR^2 < 0.04.
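The selection cut described above can be written as a simple predicate. This is a minimal sketch, not the gstlal implementation: the function name in_tracked_subset and the use of plain (SNR, chi^2) pairs are assumptions made for illustration, and only the two numerical cuts come from the text.

```python
SNR_MIN = 6.0            # lower SNR bound quoted in the text
CHISQ_RATIO_MAX = 0.04   # upper bound on chi^2 / SNR^2 quoted in the text


def in_tracked_subset(snr, chisq):
    """Return True if a (SNR, chi^2) point lies in the tracked subset."""
    return snr > SNR_MIN and chisq / snr**2 < CHISQ_RATIO_MAX


# Hypothetical (SNR, chi^2) points, for illustration only.
points = [(5.0, 0.5), (8.0, 1.0), (8.0, 4.0), (12.0, 2.0)]
tracked = [p for p in points if in_tracked_subset(*p)]
print(tracked)
```

Points with SNR at or below 6, or with chi^2/SNR^2 at or above 0.04, fall outside the subset and would not be recorded.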

If we decide that the trigger was indeed a gravitational wave signal, we can use the gstlal_ll_inspiral_remove_counts software to tell the online job to remove counts from that time.

The job doesn’t modify the background PDF immediately, but when the finish() method is called, it subtracts the relevant counts (if any) from the background, ensuring that the modified PDF won’t be saved to disk.
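The deferred subtraction can be pictured with a toy model. The sketch below is not the gstlal code: the class ToyCountTracker, its method names other than finish(), and the representation of bins as tuples are all assumptions. It only illustrates that a removal request flags a time, and that the recorded counts are subtracted when finish() is called.

```python
from collections import Counter


class ToyCountTracker:
    """Toy model: record counts near alerts, subtract them only in finish()."""

    def __init__(self, background):
        self.background = Counter(background)  # bin -> count
        self.records = {}                      # GPS time -> recorded counts
        self.pending = set()                   # GPS times flagged for removal

    def record(self, gps_time, counts):
        # Store the counts seen in the window around an alert.
        self.records[gps_time] = Counter(counts)

    def request_removal(self, gps_time):
        # Only flag the time; the background is left untouched here.
        self.pending.add(gps_time)

    def finish(self):
        # Subtract recorded counts (if any) for every flagged time.
        # Returning a copy is a choice of this sketch.
        result = Counter(self.background)
        for gps_time in self.pending:
            result.subtract(self.records.get(gps_time, Counter()))
        return result


# Hypothetical bins and counts.
tracker = ToyCountTracker({(3, 1): 10, (4, 2): 7})
tracker.record(1234567890, {(3, 1): 2})
tracker.request_removal(1234567890)
cleaned = tracker.finish()
print(cleaned[(3, 1)], cleaned[(4, 2)])
```

A flagged time with no stored record subtracts nothing, which mirrors the "(if any)" in the description above.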

To remove counts, run the script auto_count_removal.sh, found on the main branch of the o4b-containers repo, from your personal computer. Please ensure that your computer can ssh into the shared accounts and that you are using the latest version of the script. The command to run from your personal computer is:

./auto_count_removal.sh <GPS Time to remove counts>

The known fixes for this script include:

- Modifying -i <sshfile> to reflect the way the user logs in to the shared account

- Adding the user’s public ssh key to the shared account’s ~/.ssh/authorized_keys

If you can’t ssh into the shared account from your personal computer, or prefer to run the steps manually, log in to the shared account where the analysis is running. To remove counts from GPS time 1234567890, use the singularity build specified in the config file and run

gstlal_ll_inspiral_remove_counts --action remove --gps-time 1234567890 *noninj*

To check whether gps-time 1234567890 has been submitted to a job for removal:

gstlal_ll_inspiral_remove_counts --action check --gps-time 1234567890 *noninj*

NOTE: this check is currently a bit buggy and prints the following message at the end, which you can ignore for now.

Given files are all getting either warning or error. Please check if the gps time and the files are valid.

If a job doesn’t have that time stored, it will print out a statement. If nothing is printed, all jobs have that time stored.

If you need to undo a past count removal, e.g. because the event was retracted, run the following command:

gstlal_ll_inspiral_remove_counts --action undo-remove --gps-time 1234567890 *noninj*
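The three invocations above differ only in the --action value, so a small helper can assemble them consistently. This is a hedged sketch: the function remove_counts_command is hypothetical, and it uses only the flags and actions shown in this section, which may not be the tool's full interface. It builds the argument list without running anything.

```python
import glob

# Actions that appear in this section; other actions may exist.
VALID_ACTIONS = ("remove", "check", "undo-remove")


def remove_counts_command(action, gps_time, pattern="*noninj*"):
    """Build the argument list for gstlal_ll_inspiral_remove_counts."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    # Expand the file pattern the way the shell would in the run directory.
    files = sorted(glob.glob(pattern))
    return [
        "gstlal_ll_inspiral_remove_counts",
        "--action", action,
        "--gps-time", str(int(gps_time)),
    ] + files


print(" ".join(remove_counts_command("check", 1234567890)))
```

Unlike the shell, glob.glob returns an empty list when nothing matches, so running this outside the analysis directory yields a command with no file arguments. The resulting list could be passed to subprocess.run from inside the singularity build.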

For O4, our strategy is to remove counts only if an event isn’t a retraction (check Qscans for that) and the event passes the OPA threshold of 1/(10 months).
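The removal rule can be expressed as a short check. This sketch makes two assumptions that are not stated in the text: a month is taken as 30 days when converting the threshold to Hz, and "passes" is read as a FAR at or below the threshold. The function name should_remove_counts is hypothetical.

```python
SECONDS_PER_MONTH = 30 * 86400  # assumption: 30-day month
OPA_FAR_THRESHOLD_HZ = 1.0 / (10 * SECONDS_PER_MONTH)  # 1/(10 months) in Hz


def should_remove_counts(far_hz, retracted):
    """Apply the O4 rule: not retracted, and FAR at or below the OPA threshold."""
    return (not retracted) and far_hz <= OPA_FAR_THRESHOLD_HZ


print(f"OPA threshold: {OPA_FAR_THRESHOLD_HZ:.3e} Hz")
print(should_remove_counts(1e-9, retracted=False))
```

Whether an event is a retraction still has to be judged by a person, e.g. from the Qscans; the boolean here only records that decision.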

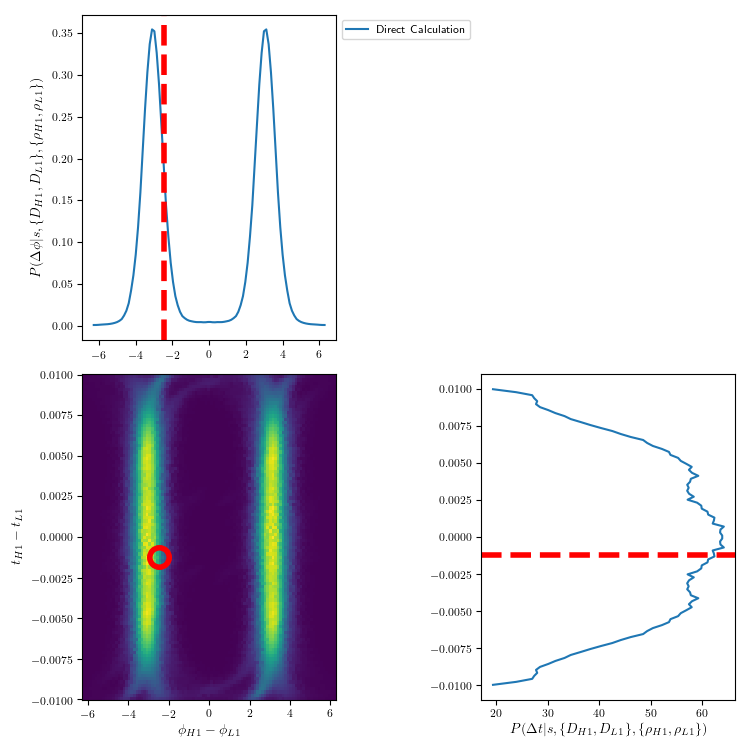

Here is an example of signal contamination and its consequent removal via the Count Tracker:

![]()

Finally, here’s a wiki page detailing Count Tracker operations during MDC04

LR term printer

Currently, only the final lnLR value is stored in GraceDB, not the values of the individual components that make up the LR. To see the individual components for a given trigger, and so understand why it was assigned its lnLR, take the following steps:

- Download the ranking stat file and coinc file from GraceDB to your working dir, and copy the population model file there from the analysis that uploaded the trigger.

- Check that the ranking stat file copied to your working dir points to the appropriate population model file.

- Run

gstlal_inspiral_calc_likelihood --verbose-level "DEBUG" --force --copy --tmp-space /tmp --likelihood-url <path to ranking stat file> <path to coinc file>

- Open up the log file to see the values of the individual components in the LR.

MR 400 has an example of the output.

Rewhitening Templates

In our online analyses, the templates are pre-whitened with a reference PSD during the setup stage. Although the filtering jobs track the PSD continuously, the templates are not re-whitened on the fly. As the noise characteristics of the data change over time, this can lead to SNR loss. For this reason, every week during maintenance we will measure a new reference PSD using the previous week of data. Then, we can re-whiten all of our templates using this updated PSD. For consistency, we’ll use the same reference PSD across all analyses. We therefore only need to generate the PSD each week for the Charlie-CIT and Rick analyses, and then copy it for use in the other analyses (Early Warning, Charlie-ICDS, Bob, etc).
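To see why a stale reference PSD costs SNR, consider a simplified frequency-domain model in which the data are whitened with the true PSD and the template with the reference PSD. The sketch below is an illustration under that assumption, not the gstlal filtering code; the function name and the toy three-bin inputs are hypothetical. By the Cauchy-Schwarz inequality the recovered fraction is at most 1, with equality when the two PSDs agree up to an overall scale.

```python
import math


def snr_recovery_fraction(template_power, psd_true, psd_ref):
    """Fraction of the optimal SNR recovered per the simplified model.

    template_power: |h(f)|^2 per frequency bin
    psd_true:       PSD of the data (used to whiten the data)
    psd_ref:        reference PSD (used to pre-whiten the template)
    """
    overlap = sum(p / math.sqrt(st * sr)
                  for p, st, sr in zip(template_power, psd_true, psd_ref))
    norm_ref = sum(p / sr for p, sr in zip(template_power, psd_ref))
    norm_true = sum(p / st for p, st in zip(template_power, psd_true))
    return overlap / math.sqrt(norm_ref * norm_true)


# Toy inputs: flat template power, reference PSD flat, true PSD has drifted.
power = [1.0, 1.0, 1.0]
psd_ref = [1.0, 1.0, 1.0]
psd_now = [1.0, 4.0, 9.0]
print(snr_recovery_fraction(power, psd_now, psd_ref))
```

Re-whitening with an up-to-date PSD corresponds to setting psd_ref close to psd_true, which pushes the fraction back towards 1.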

Instructions

- Pull the rewhitening container:

The container contains configuration files for each analysis. It only needs to be re-built when new changes are introduced to the rewhiten branch of o4b-containers. Use the latest git commit hash from the rewhiten branch of the o4b-containers repo in the name of the build so that it is clear which version of code was used.

ssh gstlalcbc.offline@ldas-pcdev5.ligo.caltech.edu

cd /home/gstlalcbc.offline/observing/4/c/builds/

singularity build --fix-perms --sandbox rewhiten_<git_hash> docker://containers.ligo.org/gstlal/o4b-containers:rewhiten

sed -i 's/GITHASH/<git_hash>/g' rewhiten_<git_hash>/online-analysis/Makefile

cp rewhiten_<git_hash>/online-analysis/Makefile /home/gstlalcbc.offline/observing/4/c/rewhiten/Makefile
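Since the git hash appears in several of the commands above, it can help to render them from a single value to avoid typos. This is a convenience sketch only: the function container_commands, the hex-hash check, and the example hash abc1234 are assumptions, while the paths and commands are copied from the steps above.

```python
import re

BUILD_DIR = "/home/gstlalcbc.offline/observing/4/c/builds/"
REWHITEN_DIR = "/home/gstlalcbc.offline/observing/4/c/rewhiten/"
IMAGE = "docker://containers.ligo.org/gstlal/o4b-containers:rewhiten"


def container_commands(git_hash):
    """Return the build commands with <git_hash> filled in."""
    # Assumption: the hash is a 7 to 40 character lowercase hex string.
    if not re.fullmatch(r"[0-9a-f]{7,40}", git_hash):
        raise ValueError(f"not a git hash: {git_hash!r}")
    build = f"rewhiten_{git_hash}"
    return [
        f"cd {BUILD_DIR}",
        f"singularity build --fix-perms --sandbox {build} {IMAGE}",
        f"sed -i 's/GITHASH/{git_hash}/g' {build}/online-analysis/Makefile",
        f"cp {build}/online-analysis/Makefile {REWHITEN_DIR}Makefile",
    ]


# Hypothetical hash, for illustration only.
for line in container_commands("abc1234"):
    print(line)
```

The printed lines are meant to be reviewed and then run by hand on the gstlalcbc.offline account.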

- Make and launch the PSD dags

From the gstlalcbc.offline account at CIT

ssh gstlalcbc.offline@ldas-pcdev5.ligo.caltech.edu

cd /home/gstlalcbc.offline/observing/4/c/rewhiten/

make user=<albert.einstein> submit_psd_dag

You’ll be asked to enter your username and password to renew the proxy. This step will launch the PSD dags. Move to the next step after the dags have finished. (Typically takes less than 30 minutes.)

- Make and launch the SVD dag:

Upon completion of the PSD dags run the following, from the gstlalcbc.offline account at CIT.

ssh gstlalcbc.offline@ldas-pcdev5.ligo.caltech.edu

cd /home/gstlalcbc.offline/observing/4/c/rewhiten/

make user=<albert.einstein> submit_svd_dag

This step submits 5 dags.

- Copy the SVDs to CIT, ICDS and NEMO:

After the SVD dags have completed, run this step from your personal account at CIT.

ssh -A <albert.einstein>@ldas-pcdev5.ligo.caltech.edu

make user=<albert.einstein> copy -f /home/gstlalcbc.offline/observing/4/c/rewhiten/Makefile

The final step is to be done during the CIT maintenance period on Tuesday.

- Update files in the low-latency run directories

(a) For analyses on CIT, the DAGs are taken down during maintenance. From the gstlalcbc.online account at CIT, run

ssh gstlalcbc.online@ldas-pcdev5.ligo.caltech.edu

cd /home/gstlalcbc.offline/observing/4/c/rewhiten/

make user=<albert.einstein> swap_cit

Once maintenance is over, relaunch the analyses. Please launch them from the gstlal headnode.

NOTE: If detectors have been down for a week, please comment out the mv commands in Makefile.update_files. With the mv commands commented out, the analysis will refer to the PSDs from the time the detector was last online.

(b) For analyses at ICDS and NEMO, take down the analysis DAG with condor_rm on the respective cluster. Remember to also take down the test-suite for Rick and Bob, and to relaunch it afterwards by running cd test-suite && make launch && cd ../..

ssh <ICDS/NEMO>

cd <analysis-run-dir>/.

condor_rm <analysis-dag-id>

make <ICDS/NEMO-analysis-name> -f Makefile.update_files

NOTE: If detectors have been down for a week, please comment out the mv commands in Makefile.update_files. With the mv commands commented out, the analysis will refer to the PSDs from the time the detector was last online. Do NOT run the rm commands in this case either.

Generate dtdphi plots

(You can make your own container by checking out the o4a-online-dtdphi-fix branch in your local gstlal build at CIT and running make install.)

To generate plots quickly, replace <build-dir> with /home/shomik.adhicary/Projects/2023_Paper_LR/gstlal-dev_231023 in the commands below.

- Go to Edward’s run directory (otherwise loading the rankingstat will crash when the program runs).

/home/gstlalcbc.online/observing/4/a/runs/trigs.edward_o4a/

- Run the following command

singularity exec -B /home <build-dir> gstlal_inspiral_lvalert_dtdphi_plotter --no-upload --output-dir /path/to/output/dir/ --add-subthresh --verbose <GID>

Make sure to include --no-upload. Note that --add-subthresh includes sub-threshold triggers with SNR < 4. You will then see a plot as follows.